When humans learned to count, they probably used their fingers as the first "digital" calculator. The abstract idea of using pebbles or sticks to represent quantities was the beginning of arithmetic and of the number system we use today. With pebbles, numbers can be added and subtracted quickly. If the pebbles are strung as beads on sticks or wires in a frame, the result is an abacus, called a soroban by the Japanese. The origins of the abacus are uncertain; versions of it were used both in the ancient Mediterranean world and in the Orient. Forgotten or abandoned in Europe during the Dark Ages, it was reintroduced there via the Arabs. In the abacus, each wire and its beads represent one position of a number: the units column, the tens column, and so on. The beads can be moved rapidly to add and subtract numbers, and multiplication can be handled by repeated addition. This was the first mechanical calculator.
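As a rough illustration of multiplication by repeated addition on a column-based device like the abacus, the Python sketch below adds the multiplicand once for each unit in each column of the multiplier, moving one column to the left for each digit. The function name is hypothetical, and treating a column shift as appending a zero is an assumption standing in for moving to the next wire.

```python
def multiply_by_repeated_addition(multiplicand: int, multiplier: int) -> int:
    """Multiply two non-negative integers using only addition and column shifts."""
    total = 0
    column_value = str(multiplicand)          # multiplicand aligned with the units column
    for digit in reversed(str(multiplier)):   # units column first, then tens, hundreds, ...
        for _ in range(int(digit)):           # add once for each bead counted in this column
            total += int(column_value)
        column_value += "0"                   # shift one column to the left (the next wire)
    return total

print(multiply_by_repeated_addition(123, 45))  # 5535
```

Shifting the multiplicand column by column means the inner loop runs at most nine times per column, rather than once per unit of the whole multiplier.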

The introduction of Arabic numerals into Europe in the thirteenth century was important because it brought with it the digit zero, which represents an empty position in our symbolic representation of numbers. If you don't think the zero is important, try multiplying Roman numerals! Efficient bookkeeping in commerce and government became possible, but it required large numbers of clerks who did all the arithmetic by hand. This was not only costly but also error-prone.

While addition and subtraction are straightforward, multiplication and division require a great deal more work. These operations were made simpler when the mathematician John Napier developed the concept of logarithms in 1614. Powers of the same base can be multiplied by adding their exponents; for example, $2^2 \times 2^3 = 2^{2+3} = 2^5$. Division can be done by subtracting exponents. With logarithms, the two numbers to be multiplied are first converted into powers of 10, and the exponents are then added. Raising 10 to the power of the sum gives the result. The compilation of logarithm tables made multiplication and division of long numbers much simpler. Later, William Oughtred invented the slide rule, a mechanical device that uses logarithms to simplify multiplication and division. The slide rule is an analog device: values are represented as positions on a continuous sliding scale rather than as discrete digits. By adding and subtracting distances on the scales, a trained user can multiply and divide quickly.
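The logarithm procedure can be sketched in a few lines of Python. Here the standard `math.log10` stands in for a printed log table, and the helper names are hypothetical. A slide rule performs the same addition of logarithms, but with lengths on its scales instead of table lookups.

```python
import math

def multiply_with_logs(a: float, b: float) -> float:
    """Multiply two positive numbers by adding their base-10 logarithms."""
    if a <= 0 or b <= 0:
        raise ValueError("logarithms are only defined for positive numbers")
    log_sum = math.log10(a) + math.log10(b)   # add the exponents
    return 10 ** log_sum                       # raise 10 to the sum

def divide_with_logs(a: float, b: float) -> float:
    """Divide two positive numbers by subtracting their base-10 logarithms."""
    if a <= 0 or b <= 0:
        raise ValueError("logarithms are only defined for positive numbers")
    return 10 ** (math.log10(a) - math.log10(b))

print(round(multiply_with_logs(4, 8), 6))  # 32.0
```

Floating-point logarithms carry tiny rounding errors, much as a table with a limited number of decimal places does, which is why the result is rounded before printing.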

In 1642, Blaise Pascal developed a mechanical adding machine that used sets of wheels connected by gears. There was a wheel for each position: the ones column, the tens column, and so on. Turning the wheels entered a number into the machine; turning them again added a second number to, or subtracted it from, the first. Carrying and borrowing were handled automatically by the gears. Although over fifty of these devices were made, they did not gain widespread acceptance, especially among clerks who feared being put out of work! In 1674, Gottfried Wilhelm Leibniz devised a machine that could do multiplication and division mechanically. Then, in 1850, D.D. Parmelee patented a similar device in which pressing keys turned the wheels and gears of the mechanical adding machine. This key-driven design is the principle behind the familiar "cash register". When the gears were driven electrically, the desk calculator was born.
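Pascal's automatic carry can be sketched in Python by storing a number as a list of wheel positions, one decimal digit per wheel with the ones wheel first, and passing a carry to the next wheel whenever a wheel turns past 9. The helper names are hypothetical, and the sketch covers addition of non-negative numbers only, not borrowing.

```python
def add_on_wheels(a_digits, b_digits):
    """Add two numbers stored as digit wheels (ones wheel first), propagating carries."""
    result = []
    carry = 0
    for i in range(max(len(a_digits), len(b_digits))):
        a = a_digits[i] if i < len(a_digits) else 0
        b = b_digits[i] if i < len(b_digits) else 0
        total = a + b + carry
        result.append(total % 10)   # the digit now showing on this wheel
        carry = total // 10         # a full turn past 9 advances the next wheel by one
    if carry:
        result.append(carry)        # the carry moves onto a new, higher wheel
    return result

def to_wheels(n):
    """Split a non-negative integer into wheel digits, ones wheel first."""
    return [int(d) for d in reversed(str(n))]

def from_wheels(digits):
    """Read the wheels back as an ordinary integer."""
    return int("".join(str(d) for d in reversed(digits)))

print(from_wheels(add_on_wheels(to_wheels(987), to_wheels(45))))  # 1032
```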

As commerce with the Orient expanded, so did the need for accurate logarithm tables for navigation, and the introduction of the cannon in warfare required trigonometric tables. Charles Babbage convinced the English government that a machine could produce error-free tables for use by the Admiralty. Babbage designed an "Analytical Engine" that would be capable of any arithmetic operation, would be instructed by punched cards (an idea borrowed from the Jacquard loom), and could store numbers and compare values. Although he spent his entire fortune and worked on the project for 37 years, it was never completed. The design was too complex for a purely mechanical device, and the workshops of the day simply could not manufacture gears to the required tolerances. Babbage was ahead of his time, but he did envision the machine that we call a computer today.

Herman Hollerith used information stored on punched cards to process the 1890 U.S. census; he, too, borrowed the idea from the Jacquard loom. The stored information was processed by mechanically collating, sorting, and summing the data. Hollerith also developed the Hollerith Code, which encodes alphanumeric information as holes punched in a card. His Tabulating Machine Company later became part of the firm that was renamed International Business Machines (IBM) in 1924.

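In 1936, Alan Turing argued that any calculation that can be expressed as a finite sequence of well-defined steps can be carried out by a simple abstract machine, now called a Turing machine. Given enough memory, a general-purpose computer can carry out any such calculation, which is why computers are sometimes described as Turing machines.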
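To show what "a finite number of steps done by a machine" means in Turing's model, the Python sketch below simulates a one-tape machine driven by a rule table: each rule says what to write, which way to move the head, and which state to enter next. The simulator and the binary-increment program are hypothetical illustrations in the usual textbook formulation, not Turing's own notation.

```python
def run_turing_machine(tape, rules, state="start", halt="halt", max_steps=10_000):
    """Simulate a one-tape Turing machine and return the final tape contents.

    `rules` maps (state, symbol) -> (symbol_to_write, move, next_state),
    where move is -1 for left and +1 for right. Unvisited cells read as "_".
    """
    cells = dict(enumerate(tape))   # sparse tape: position -> symbol
    head = 0
    steps = 0
    while state != halt:
        if steps >= max_steps:
            raise RuntimeError("no halt within the step limit")
        symbol = cells.get(head, "_")
        write, move, state = rules[(state, symbol)]
        cells[head] = write
        head += move
        steps += 1
    lo, hi = min(cells), max(cells)
    return "".join(cells.get(i, "_") for i in range(lo, hi + 1)).strip("_")


# Hypothetical example program: add 1 to a binary number written on the tape.
INCREMENT = {
    ("start", "0"): ("0", +1, "start"),  # scan right to the end of the number
    ("start", "1"): ("1", +1, "start"),
    ("start", "_"): ("_", -1, "carry"),  # past the last digit: turn back
    ("carry", "1"): ("0", -1, "carry"),  # 1 + 1 = 0, carry 1 to the left
    ("carry", "0"): ("1", -1, "halt"),   # 0 + 1 = 1, no further carry
    ("carry", "_"): ("1", -1, "halt"),   # ran off the left end: new leading 1
}

print(run_turing_machine("1011", INCREMENT))  # 1100
```

The same simulator runs any other program supplied as a rule table, which is the sense in which one machine can carry out many different calculations.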

Howard Aiken at Harvard University built one of the world's first computers in 1944. It was called the Mark I, or ASCC (Automatic Sequence Controlled Calculator). The Mark I was an electromechanical machine built from relay switches, and it was used to calculate cannon shell trajectories. Programs and data were input on punched paper tape. Aiken had, in effect, built the "Analytical Engine" that Babbage had envisioned. Unfortunately, the machine was very slow, taking seconds to multiply or divide six-digit numbers. It was huge: it weighed 5 tons and contained 500 miles of wiring. The Mark I was a computer rather than a calculator because its function could be altered by changing its programming. A computer can also handle alphanumeric data as well as numbers, and it can store that data.

One of the giants in the development of high-level languages for digital computers was Grace Murray Hopper (1906-1992), recruited by Aiken in 1943 to be the third programmer of the Mark I. Starting in 1959, she led the design team for the COBOL language in association with Sperry Univac. Hopper is often credited with popularizing the term "bug" for a glitch in the machinery.

The first actual bug was a moth that flew through an open window and into one of the relays of the Mark II, the Mark I's successor, temporarily shutting down the system. The moth was removed and pasted into the logbook. From then on, whenever Hopper's team was not producing numbers, they claimed to be "debugging the system".¹

A much faster computer than the Mark I was made possible by replacing the relays with electronic switches, which rely on the movement of electrons rather than the slow physical movement of mechanical switches. The electronic switches used were vacuum tubes. John Mauchly and J. Presper Eckert at the University of Pennsylvania built the ENIAC (Electronic Numerical Integrator and Computer) for the U.S. government to generate gunnery tables for use in the Second World War. Not finished until 1946, the ENIAC contained 18,000 vacuum tubes, weighed over 30 tons, occupied 1,600 square feet of floor space, and required 100 kilowatts of power. Heat dissipation was a problem, and the vacuum tubes were not very reliable; whenever one burned out, it had to be replaced. The ENIAC was programmed by rewiring control boards to perform the desired functions. It was much faster than the Mark I, performing thousands of additions per second. It was said at the time that this one machine would keep all the world's mathematicians busy for over 200 years!

One difficulty shared by the ENIAC and the Mark I was that both represented numbers in decimal. John von Neumann, building on an idea of Claude Shannon's, proposed that binary numbers would simplify the design of computers. Because binary numbers need only two states, the circuits are much easier to build: an electronic switch is either on or off, representing the 1s and 0s of the binary system. Shannon also proposed using binary switching circuits to represent the symbolic logic published by George Boole in 1854. In symbolic logic there are only two states, true and false, and several "operators" such as AND and OR. Truth tables can be generated for each operator, listing its result for every combination of true and false inputs.
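Both ideas in this paragraph, binary representation and Boolean truth tables, can be sketched briefly in Python. The conversion uses the standard repeated-division-by-2 method, and the truth tables are produced with `itertools.product`; the function and table names are hypothetical.

```python
from itertools import product

def to_binary(n: int) -> str:
    """Write a non-negative integer in binary by repeated division by 2."""
    if n == 0:
        return "0"
    bits = []
    while n > 0:
        bits.append(str(n % 2))  # each remainder is the next bit, lowest first
        n //= 2
    return "".join(reversed(bits))

# Boolean operators on the two values true and false.
OPERATORS = {
    "AND": lambda a, b: a and b,
    "OR": lambda a, b: a or b,
    "XOR": lambda a, b: a != b,
}

def truth_table(op):
    """Return (A, B, result) for every combination of two true/false inputs."""
    return [(a, b, op(a, b)) for a, b in product([False, True], repeat=2)]

print(to_binary(13))  # 1101
for name, op in OPERATORS.items():
    print(name, truth_table(op))
```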

Transistors, which later replaced vacuum tubes as electronic switches, can be wired into small circuits that perform binary addition. Logic gates corresponding to the Boolean operators AND, OR, NOT, XOR, etc. can be constructed from vacuum tubes or transistors. The AND gate, for example, can be built from three transistors wired as shown. The output (x) of such a circuit is at high voltage only when both inputs (A and B) are at high voltage. By combining thousands of such simple electronic circuits, a computer can be constructed.
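How simple gates combine into arithmetic can be illustrated with the textbook half adder and full adder. The Python sketch below models each gate as a function on bits (a software stand-in for the transistor circuits, with hypothetical names) and chains eight full adders into a ripple-carry adder; the 8-bit width is an assumption chosen for the example.

```python
def AND(a: int, b: int) -> int:
    return a & b

def OR(a: int, b: int) -> int:
    return a | b

def XOR(a: int, b: int) -> int:
    return a ^ b

def half_adder(a: int, b: int):
    """Add two bits: XOR gives the sum bit, AND gives the carry."""
    return XOR(a, b), AND(a, b)

def full_adder(a: int, b: int, carry_in: int):
    """Add two bits plus an incoming carry using two half adders and an OR gate."""
    s1, c1 = half_adder(a, b)
    total, c2 = half_adder(s1, carry_in)
    return total, OR(c1, c2)

def ripple_add(x: int, y: int, width: int = 8) -> int:
    """Add two unsigned integers bit by bit, passing each carry to the next full adder."""
    carry = 0
    result = 0
    for i in range(width):
        bit, carry = full_adder((x >> i) & 1, (y >> i) & 1, carry)
        result |= bit << i
    return result  # a carry out of the top bit is discarded, as in fixed-width hardware

print(ripple_add(5, 9))  # 14
```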

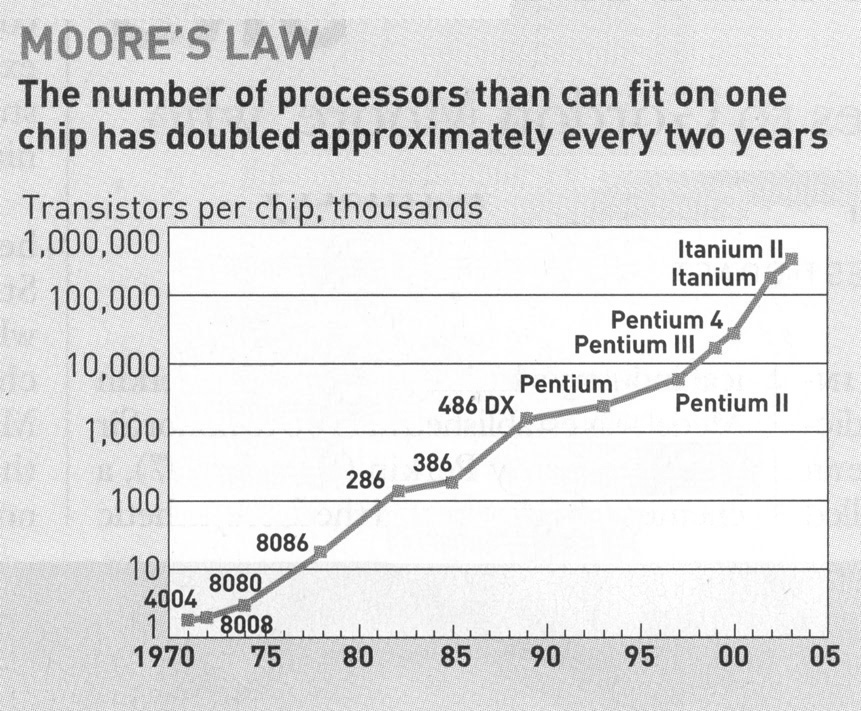

Computing power is closely tied to the number of individual transistors that can fit on a microprocessor. When IBM chose the Intel 8088 microprocessor for the first IBM personal computer in 1981, there were almost 100 times as many transistors per unit area as there had been on the 4004 microprocessor just 10 years before. Gordon Moore, a founder of Intel, observed that component density on integrated circuits appeared to grow exponentially. His observation is now known as Moore's Law, which states that microprocessor density doubles every two years (revised from the original doubling period of one year). The accompanying diagram (copied from Chemical and Engineering News, September 13, 2004, p. 26) shows density versus year, on a logarithmic scale against a linear time axis, for the various microprocessors used in microcomputers, starting with the 4004 in 1971.
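The arithmetic behind Moore's Law is a simple exponential. The short Python sketch below, with hypothetical helper names, computes the growth factor over a span of years for a given doubling period and, conversely, the doubling period implied by an observed growth factor. The only figures it uses are the ones quoted in the paragraph above.

```python
import math

def growth_factor(years: float, doubling_period: float) -> float:
    """Factor by which density grows over `years` if it doubles every `doubling_period` years."""
    return 2 ** (years / doubling_period)

def implied_doubling_period(years: float, factor: float) -> float:
    """Doubling period implied by an observed growth `factor` over `years`."""
    return years / math.log2(factor)

print(growth_factor(10, 2))   # 32.0 with the revised two-year doubling period
print(growth_factor(10, 1))   # 1024.0 with the original one-year doubling period
print(round(implied_doubling_period(10, 100), 2))  # 1.51 for a ~100x rise over 10 years
```

For the roughly 100-fold increase between the 4004 and the 8088, the implied doubling period comes out at about one and a half years, between the original and revised figures.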
